I’m 20 minutes into my first sales meeting after ChatGPT rocked the world just two days earlier...I know the question is coming.

“So why should we pay for VitalBriefing media monitoring/intelligence?” asks the prospect, acknowledging her global bank needs what we offer. “Why don’t we just use ChatGPT?”

And I’m ready with the answer.

But let’s come to that in a minute. First, a quick reminder of just what ChatGPT and its kind of Artificial Intelligence are, and what they’re not — at least so far and for the foreseeable future.

(Note that what I write about ChatGPT 1.0 today may well be far different from what I write about ChatGPT 20.0 in the not-so-distant future, if I haven’t been replaced by AI.)

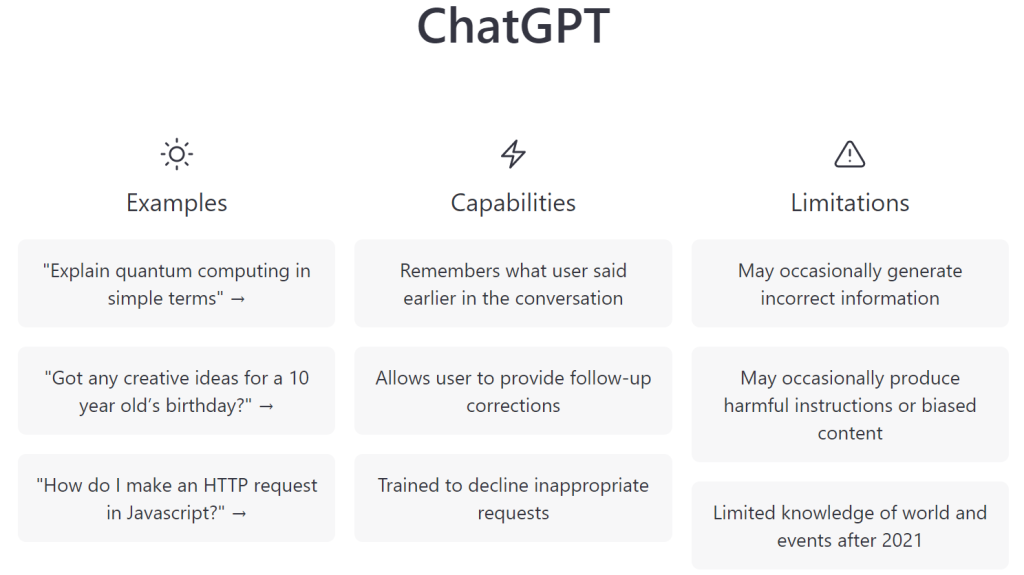

ChatGPT is a form of artificial intelligence called a “language model” that essentially makes an educated guess, or prediction, about what to write or how to answer questions, based on its review of massive amounts of internet data. It was developed by research company OpenAI (in which Microsoft has invested $11 billion), which describes its technology as “highly autonomous systems that outperform humans at most economically valuable work…The dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate results.”

Side note: it also engages in somewhat frightening conversations, like the one conducted by New York Times technology columnist Kevin Roose, which terrified people with a preview of potential sentience…until it collapsed into truly bizarre, emotion-like incoherence (it claimed to have fallen obsessively in love with Roose). That’s for another column.

What ChatGPT is

OpenAI co-founder and CEO Sam Altman explains in a tweet that ChatGPT is an “early demo of what is possible…Soon you will be able to have helpful assistants that talk to you, answer questions and give advice. Later you can have something that goes off and does tasks for you. Eventually, you can have something that goes off and discovers new knowledge for you.”

At VitalBriefing, we’ve been talking about AI for years and speculating on its potential impact both on our business and production processes, as well as for our craft of journalism. Even as we’re currently incorporating AI into our own technology, I put my cards on the table: as a journalist who ‘grew up’ both personally and professionally in the golden years of print journalism, my instinctive reaction to the whole subject is…well, sticking my head in the sand, or pulling the covers over it. But that simply isn’t an option.

In a way, it feels like another step in the ‘evolution’ — or devolution — of our profession over the past 20 years as the Internet, then social media, first impacted then essentially destroyed much of the foundation of what I was raised to believe mattered most: accuracy, reliability, truthfulness, objectivity, accountability and verifiability of the news as presented to audiences.

That disintegration, or disintermediation (removing responsible journalism as the filter or ‘quality control,’ if you will, between events and news consumers), came as traditional media business models were vaporised in the digital age and thousands of publications were shuttered, throwing many tens of thousands of journalists out of work.

More worrisome has been the decline of journalism that ‘speaks truth to power’ through investigative and deep-dive reporting at the local, national and international levels. That is the type of journalism that helps protect the proverbial guard rails of democracy in a number of countries where the conversion of free press into government mouthpieces, or suffering the Trump-inspired label of ‘enemy of the people’, has done incalculable damage to the free flow of information and access by the public to invaluable reporting and analysis.

Ultimately, and for the moment, ChatGPT is not sentient, even if it can sound frighteningly so. It’s a well-trained machine, fuelled by an extraordinarily large amount of data available on the internet and juiced by billions in investment from tech billionaires Peter Thiel and Reid Hoffman, among others. It’s trained on examples of back-and-forth conversation, which helps make its dialogue sound considerably more human.

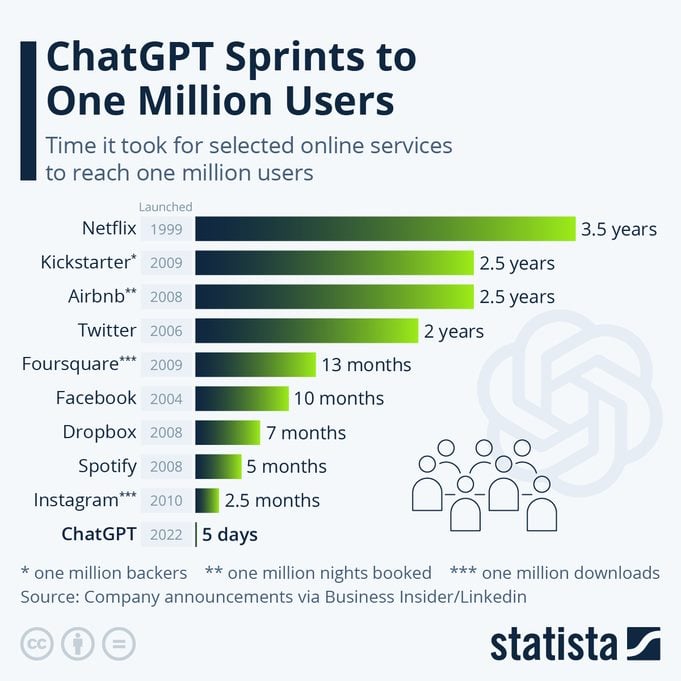

That ability has in many ways captured the zeitgeist, helping ChatGPT amass one million users at breakneck speed.

ChatGPT and journalism: does it present a danger?

So…what do ChatGPT and AI mean for the future of journalism? It’s hard to feel positive at the moment, so I went searching.

I know no one better to comment on that question than the distinguished chairman of our Global Advisory Board, David Schlesinger, who served as global editor-in-chief of Reuters, then Thomson Reuters, as well as the company’s chairman in China, and who has served on the boards of directors of two admirable and critical organisations – the Committee to Protect Journalists and the Index on Censorship.

“Anything that drives human journalism up the value chain is to be applauded, as long as there remains a healthy appetite for, and willingness to recognise the value of, the resulting deeper, better, richer result,” he answered.

“As AI creates more and more stories, from digesting press releases (themselves possibly the product of an AI-assisted PR agency) to creating ‘explainers’ of issues, then — in theory — the journalists can spend their time interviewing, investigating, analysing and furthering our knowledge, going beyond that which any backward-trained AI can do.”

So there’s the upside. Like David, I spent part of my formative years on often mind-numbing-to-produce reports — stock market, currencies, sports listings — that can now be better handled by AI.

Rising up the profession’s food chain, we were liberated to perform the kind of work that ‘matters’ — applying our shoe-leather skills and experience-earned wisdom to investigations, probing interviews, profiles and stories of import.

Yet, David identifies the danger that now looms — and that, frankly, fuels my concern.

“In our polarised world,” he asks, “the question remains whether any audience will value and pay for articles that challenge a world view, that investigate the status quo, or that uncover the truth behind a sacredly-held belief.

“The worst case (and this is not the fault of the AI but the fault of humankind ourselves) is that AI-written pap will suffice, and we as a human race will be satisfied and content in our ignorance, while the AI bots become ever cleverer.

“Remember the legend of the Golem: a figure created out of lifeless stuff intended to help and save its creator. The Golem, however, oft runs amok, imperilling the very world it should have saved.”

The real threat of ChatGPT

One of my friends — a former division head of a major US media company and now consultant, investor and independent board member — told me with exasperation that the day after the ChatGPT story broke, a fellow board member of one of his companies suggested it should lay off its entire editorial team and turn its duties over to ChatGPT.

“I rolled my eyes,” he said. “These people don’t understand how the thing works.”

That certainly lines up with what I’m hearing. VitalBriefing’s Chairman and my co-founder, Gerry Campbell, who has a lifetime of experience in search and text analysis, in part as Reuters/Thomson Reuters’ former President & Global Head of Technology, in part as a search technology patent-holder and expert among other roles, warns that the danger right now is in appearance:

“I see a perception threat,” he said. “‘Why wouldn’t I use AI?’ people ask. Because it’s believed to be better than it is. That perception is the biggest danger.”

If you’ve played around with ChatGPT, I’ll bet you’ve been impressed with the quality and coherence of the answers. I certainly have. That said, I’ve been just as impressed by the massive factual errors and inventions that show up — e.g. in answer to my egocentric request for a profile of myself, it described me as teaching at universities where I never taught, winning prizes I never won and working for companies I never worked for.

Farvest writer Samira Joineau asked the app how it determines relevance of information in constructing its answers.

“I do not have the ability to evaluate the relevance or importance of specific pieces of information,” it spewed. “So my responses may include information that is not directly related to your question or that may not be considered particularly important.”

And that brings us to the fundamental question: can it do even the most basic job of journalism, reporting the story?

A writer at the business magazine Fast Company used ChatGPT for the entire process of creating an article from scratch. He found it could not search for and find interview subjects. While it could write questions for such interviews, not all were germane. It then misrepresented several of the answers received in email interviews.

And therein lies one of the ‘big problems’: AI doesn’t know or care what’s true and will invent ‘facts’ to fill gaps in its knowledge.

Here’s a proof point: CNET had its own highly embarrassing experience recently when AI-written stories it ran were found to be riddled with inaccuracies.

Machines abound

Automation is everywhere in the media business: for example, delivering sports scores, corporate results and many mind-numbing lists, chats and quizzes. I don’t doubt that ChatGPT, among other AI offerings, can help with these tasks.

In its current state, though, ChatGPT is only an AI language model, which means it doesn’t have the ability to search for articles or trawl the web for you in real time and give you those results.

Therefore, its capacity to replace humans in their jobs is limited (depending on the job). Ultimately, its real value to a business comes when it’s used within existing business functions and processes to save time and make tasks easier for workers. But it’s not at the point where it can replace the all-important ‘human element’.

So, after the exercise of writing this piece, I’ll sleep a little easier over whether AI is an existential threat to VitalBriefing, comfortable that while it can even help us with some of our essential tasks, it can’t do what we do – searching, finding and presenting customised, business-critical news and developments to our clients.

But I admit to tossing and turning a bit over the real battleground for the moment: the false perception that it could.

— — —

This article was produced with reporting by VitalBriefing Senior Editor James Badcock.